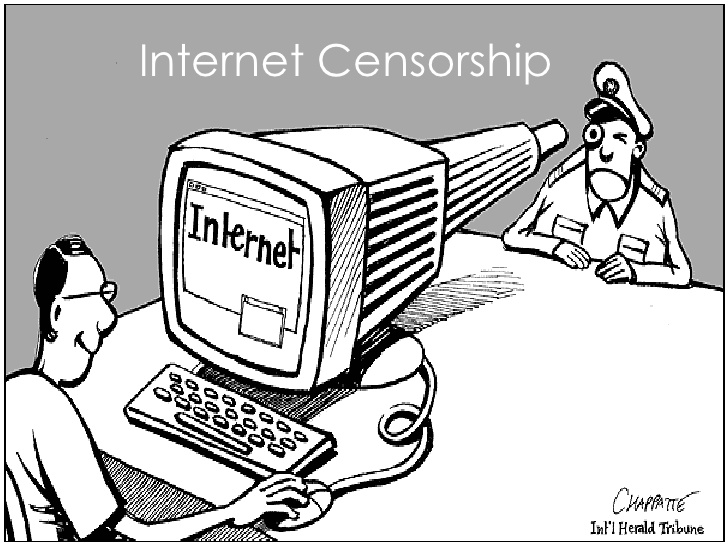

Speech regulations are always going to be either too loose or too strict, and users are always going to exploit them.

Regulating speech is difficult even under the best of conditions, and the internet is far from the best of conditions. Its patchwork system of regulation by private entities satisfies no one, yet it is likely to endure for the foreseeable future.

By way of explanation, consider a case in which authority is (mostly) centralized and the environment is (mostly) controlled: my own. I am a professor, and if a student regularly made offensive remarks in class, I would meet with that person and try to persuade him or her to desist. (For the record, this has never happened to me.) If the person continued, I would at some point seek to ban the student from my class, with the support of my university.

Yet this solution is not as straightforward as it seems. It may be acceptable if you trust my judgment, but it does not scale readily. It works only because such incidents are so rare. I can’t be effective in my job if I need to spend my time regulating and trying to modify the speech of my students.

And this approach becomes all the more unwieldy when adopted by internet platforms. Patreon is a crowd-funding site that has vowed to persuade and reform its patron-seeking users who engage in what it considers offensive behavior. If those users do not fall into line, as defined by the standards of Patreon, Patreon will ban them.

But outsiders (and some users) will never fully trust the company’s judgment, whether or not Patreon’s judgment is good. Furthermore, the company’s judgment calls are difficult to scale, because each case requires personal intervention, and at some point the decisions will become bureaucratic. Over time, disputes over which users are banned and which are not will distract Patreon from its core function of helping people raise money over the internet.

An alternative approach is simply to let anyone use the platform and not engage in much regulation of speech or users. A few years ago, private regulation was a much smaller issue than it is today, even if extremely offensive material posted on Facebook or YouTube often prompted a takedown order.

The problem with these systems is that they were too hospitable to bad actors. The Russian government, for instance, used multiple internet platforms to try to sway the 2016 U.S. presidential election. I agree with Nate Silver that such efforts did not have significant influence over the final outcome, but that is not the point here. If platforms are perceived as hospitable to bad actors, including enemies of America and democracy, those platforms will start to lose their legitimacy in the eyes of both the public and its elected representatives.

A third approach is for platform companies to regulate by algorithm. For instance, if a posting refers to Nazis and uses derogatory terms for Jews, the algorithm could remove it automatically. That may work fine at first, but eventually the offensive posters will figure out how to game the algorithms. Then there is the issue of false positives: postings that the algorithm flags as offensive but that aren’t. The upshot is that human judgment will remain a crucial supplement to any algorithm, which will have to be fine-tuned regularly.

As these processes evolve, no internet platform will be consistent or fair across its many users: some will get away with being offensive or dangerous, while others may be censored or kicked off for insufficient reasons. Facebook has recently devoted a lot of resources to regulating speech on its platform. Yet undesired uses of the platform have hardly gone away, especially outside the U.S. Furthermore, the need for human judgment makes algorithmic regulation increasingly costly and hard to scale. As Facebook grows bigger and reaches across more regions and languages, it becomes harder to find the humans who can apply what Facebook considers to be the proper standards.[1]

I’d like to suggest a simple trilemma. When it comes to private platforms and speech regulation, you can choose two of three: scalability, effectiveness and consistency. You cannot have all three. Furthermore, this trilemma suggests that we — whether as users, citizens or indeed managers of the platforms themselves — won’t ever be happy with how speech is regulated on the internet.

One view, which may appear cynical, is that the platforms are worth having, so they should appease us by at least trying to regulate effectively, even though both we and they know they won’t really succeed. Circa 2019, I don’t see a better solution. Another view is that we’d be better off with how things were a few years ago, when platform regulation of speech was not such a big issue. After all, we Americans don’t flip out when we learn that Amazon sells copies of “Mein Kampf.”

The problem is that once you learn about what you can’t have — speech regulation that is scalable, consistent and hostile to bad agents — it is hard to get used to that fact. Going forward, we’re likely to see platform companies trying harder and harder, and their critics getting louder and louder.

- Disclaimer: Facebook has been a donor to my university and research center (Mercatus).

This column does not necessarily reflect the opinion of the editorial board or Bloomberg LP and its owners.

To contact the author of this story:

Tyler Cowen at tcowen2@bloomberg.net

To contact the editor responsible for this story:

Michael Newman at mnewman43@bloomberg.net
